Deploying a Vanilla K8S Cluster on Debian 12


1. Preparing the K8S Cluster Hosts

1.1 Host Operating System

| No. | Operating system and version | Notes |
|---|---|---|
| 1 | Debian 12 | |

1.2 Host Hardware Configuration

| Hostname | Role | CPU | Memory | Disk |
|---|---|---|---|---|
| k8s-master01 | master | 2 cores | 2 GB | 20 GB |
| k8s-worker01 | worker (node) | 2 cores | 2 GB | 20 GB |
| k8s-worker02 | worker (node) | 2 cores | 2 GB | 20 GB |

1.3 Host Configuration

1.3.1 Hostname Configuration

This guide uses three hosts to build the Kubernetes cluster: one master node named k8s-master01 and two worker nodes named k8s-worker01 and k8s-worker02. Set the hostname on each host.

On the master node:

hostnamectl set-hostname k8s-master01

On worker01:

hostnamectl set-hostname k8s-worker01

On worker02:

hostnamectl set-hostname k8s-worker02

1.3.2 Network Configuration

master01

Edit the /etc/network/interfaces file:

nano /etc/network/interfaces

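Set the following content (replace eth0 with the actual interface name shown by ip addr; Debian 12 uses predictable names such as ens18 or ens33 by default):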

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface: static IP address, subnet mask,
# default gateway and DNS server
# (dns-nameservers only takes effect when the resolvconf package is installed)
auto eth0
iface eth0 inet static
    address 192.168.15.101
    netmask 255.255.255.0
    gateway 192.168.15.2
    dns-nameservers 8.8.8.8

Restart the networking service:

systemctl restart networking

worker01

Edit the /etc/network/interfaces file:

nano /etc/network/interfaces

Set the following content:

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface: static IP address, subnet mask,
# default gateway and DNS server
auto eth0
iface eth0 inet static
    address 192.168.15.102
    netmask 255.255.255.0
    gateway 192.168.15.2
    dns-nameservers 8.8.8.8

Restart the networking service:

systemctl restart networking

worker02

Edit the /etc/network/interfaces file:

nano /etc/network/interfaces

Set the following content:

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface: static IP address, subnet mask,
# default gateway and DNS server
auto eth0
iface eth0 inet static
    address 192.168.15.103
    netmask 255.255.255.0
    gateway 192.168.15.2
    dns-nameservers 8.8.8.8

Restart the networking service:

systemctl restart networking
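The three interfaces files above differ only in the address line. The following minimal sketch generates the file for one node so the values cannot drift apart. The function name gen_interfaces is hypothetical; netmask, gateway and DNS are the values used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/network/interfaces file for one node.
# Arguments: interface name, IPv4 address. Netmask, gateway and DNS server
# are the values used in this guide (192.168.15.0/24, gateway 192.168.15.2).
gen_interfaces() {
  iface="$1"
  addr="$2"
  cat << EOF
# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto $iface
iface $iface inet static
    address $addr
    netmask 255.255.255.0
    gateway 192.168.15.2
    dns-nameservers 8.8.8.8
EOF
}
```

For example, on worker01 you could review the output of gen_interfaces eth0 192.168.15.102 and then redirect it into /etc/network/interfaces.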

1.3.3 Hostname and IP Address Resolution

Perform this step on all hosts in the cluster.

cat >> /etc/hosts << EOF
192.168.15.101 k8s-master01
192.168.15.102 k8s-worker01
192.168.15.103 k8s-worker02
EOF
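Running the append above twice duplicates the entries. A minimal idempotent variant, assuming a hypothetical helper named add_host_entry, only appends a line when the hostname is not already present:

```shell
#!/bin/sh
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if that
# hostname is not already present, so the step can be re-run safely.
add_host_entry() {
  ip="$1"
  name="$2"
  file="${3:-/etc/hosts}"
  # -w matches whole words, so k8s-worker01 does not match k8s-worker010
  if ! grep -qw -- "$name" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage on each host: add_host_entry 192.168.15.101 k8s-master01, then the same for k8s-worker01 and k8s-worker02 with their addresses.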

1.3.4 Time Synchronization

Check the current time:

date

Thu Jan 8 04:03:35 PM CST 2025

Change the time zone:

timedatectl set-timezone Asia/Shanghai

Check the time again:

date

Thu Jan 9 12:03:35 AM CST 2025

Install ntpdate:

apt install -y ntpdate

Synchronize the time with ntpdate:

ntpdate ntp.aliyun.com

Use a cron job to synchronize the time automatically at regular intervals:

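crontab -e
no crontab for root - using an empty one
Select an editor. To change later, run 'select-editor'.
1. /bin/nano <---- easiest
2. /usr/bin/vim.tiny
Choose 1-2 [1]: 1

Add the following line. Cron runs jobs with a minimal PATH (/usr/bin:/bin), so use the full path to ntpdate, which is installed in /usr/sbin:

0 0 * * * /usr/sbin/ntpdate ntp.aliyun.com

Confirm the entry. The job runs the time synchronization every day at 00:00:

crontab -l
......
# m h dom mon dow command
0 0 * * * /usr/sbin/ntpdate ntp.aliyun.com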

1.3.5 Configure Kernel Forwarding and Bridge Filtering

Perform this step on all hosts in the cluster.

Create a file listing the kernel modules to load at boot:

cat << EOF | tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

Load the modules manually for the current session:

modprobe overlay

modprobe br_netfilter

Verify that the modules are loaded:

lsmod | grep overlay
overlay 163840 0

lsmod | grep br_netfilter
br_netfilter 36864 0
bridge 311296 1 br_netfilter

Add the bridge filtering and IP forwarding kernel parameters:

cat << EOF | tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

Apply the kernel parameters:

sysctl --system
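To confirm the parameters took effect, the sketch below reads the three values straight from /proc/sys (sysctl net.ipv4.ip_forward and similar commands show the same thing). The function names sysctl_path and check_k8s_sysctls are hypothetical.

```shell
#!/bin/sh
# Map a dotted sysctl key such as net.ipv4.ip_forward to its /proc/sys file.
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Print OK/FAIL for each parameter set in /etc/sysctl.d/k8s.conf;
# return non-zero if any of them is missing or not equal to 1.
check_k8s_sysctls() {
  status=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path=$(sysctl_path "$key")
    # The bridge keys only exist once br_netfilter is loaded
    if [ -r "$path" ] && [ "$(cat "$path")" = "1" ]; then
      echo "OK   $key = 1"
    else
      echo "FAIL $key"
      status=1
    fi
  done
  return $status
}
```

Run check_k8s_sysctls on each host; a FAIL for the bridge keys usually means br_netfilter is not loaded.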

1.3.6 Install ipset and ipvsadm

Perform this step on all hosts in the cluster.

Install ipset and ipvsadm:

apt install -y ipset ipvsadm

Configure the IPVS kernel modules to be loaded at boot:

cat << EOF | tee /etc/modules-load.d/ipvs.conf
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
EOF

Create a script that loads the modules now:

cat << EOF | tee ipvs.sh
#!/bin/sh
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

Run the script to load the modules:

sh ipvs.sh
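A quick way to confirm that every module from sections 1.3.5 and 1.3.6 is loaded is to look for each name in the first column of /proc/modules. This is a minimal sketch; the helper name missing_modules is hypothetical.

```shell
#!/bin/sh
# Report which of the given kernel modules are not in the loaded-module list
# (normally /proc/modules, whose first column is the module name).
# Returns non-zero if at least one module is missing.
missing_modules() {
  modules_file="$1"; shift
  rc=0
  for mod in "$@"; do
    # Exact match on the first column, so ip_vs does not match ip_vs_rr
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "missing: $mod"
      rc=1
    fi
  done
  return $rc
}
```

Usage: missing_modules /proc/modules overlay br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack && echo "all modules loaded"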

1.3.7 Disable Swap

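By default, kubelet fails to start while swap is enabled, so swap must be turned off. To turn it off temporarily (until the next reboot), run:

swapoff -a

To turn it off permanently, prevent the swap partition from being mounted automatically at boot, then restart the operating system.

Debian uses a swap partition rather than a swap file by default, and mounts it by UUID. Edit /etc/fstab and comment out the swap line with #: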

nano /etc/fstab
......
# <file system> <mount point> <type> <options> <dump> <pass>
# / was on /dev/sda2 during installation
UUID=f301ba2f-b8a3-4a58-bb6c-23a20ee16f9a / ext4 errors=remount-ro 0 1
# /boot/efi was on /dev/sda1 during installation
UUID=CD2C-D5DB /boot/efi vfat umask=0077 0 1
# swap was on /dev/sda3 during installation
# Swap entry commented out:
#UUID=6e04859e-e7b2-44d1-af7e-64dda10f2d02 none swap sw 0 0
/dev/sr0 /media/cdrom0 udf,iso9660 user,noauto 0 0

On Debian, systemd also manages swap activation, so the corresponding systemd swap unit must be disabled as well. List the swap units:

systemctl --type swap --all
UNIT LOAD ACTIVE …
dev-disk-by\x2did-scsi\x2d0QEMU_QEMU_HARDDISK_drive\x2dscsi0\x2dpart3.swap loaded active …
dev-disk-by\x2dpartuuid-a6f4e7e1\x2dd1aa\x2d462c\x2dae52\x2d2deb26d81bf0.swap loaded active …
dev-disk-by\x2dpath-pci\x2d0000:01:01.0\x2dscsi\x2d0:0:0:0\x2dpart3.swap loaded active …
dev-disk-by\x2duuid-6e04859e\x2de7b2\x2d44d1\x2daf7e\x2d64dda10f2d02.swap loaded active …
dev-sda3.swap loaded active …

LOAD = Reflects whether the unit definition was properly loaded.
ACTIVE = The high-level unit activation state, i.e. generalization of SUB.
SUB = The low-level unit activation state, values depend on unit type.
5 loaded units listed.
To show all installed unit files use 'systemctl list-unit-files'.

Mask the unit for the swap partition (dev-sda3.swap on this machine; use the name from your own output):

systemctl mask dev-sda3.swap
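After the reboot, you can check that no swap is active. /proc/swaps always starts with a header line, and every further line is an active swap device or file. The sketch below relies on that; swapon --show (empty output) and free -h (Swap: 0B) show the same thing. The function name swap_active is hypothetical.

```shell
#!/bin/sh
# Succeed (exit status 0) if any swap is active, i.e. if the swaps file
# has more lines than its single header line.
swap_active() {
  swaps_file="${1:-/proc/swaps}"
  awk 'END { exit !(NR > 1) }' "$swaps_file"
}
```

Usage: if swap_active; then echo "swap is still on"; else echo "swap is off"; fi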

2. Preparing the Container Runtime (containerd) for the K8S Cluster

2.1 Download containerd

Download the chosen containerd release:

wget https://github.com/containerd/containerd/releases/download/v1.7.24/cri-containerd-1.7.24-linux-amd64.tar.gz

Install it by extracting the archive into the root directory:

tar xf cri-containerd-1.7.24-linux-amd64.tar.gz -C /

2.2 Generate and Edit the containerd Configuration File

Create the configuration directory:

mkdir -p /etc/containerd

Generate the default configuration file:

containerd config default > /etc/containerd/config.toml

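Edit the sandbox_image setting (around line 67; the exact line number can differ between versions) and change the pause image from 3.8 to 3.9. The pause tag should match the one listed by kubeadm config images list in section 3.3.3:

nano /etc/containerd/config.toml

sandbox_image = "registry.k8s.io/pause:3.9"

If you use the Alibaba Cloud container image registry, set it instead to:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"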

Edit the SystemdCgroup setting (around line 139) and change it from false to true:

nano /etc/containerd/config.toml

SystemdCgroup = true
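The two edits can also be made non-interactively with sed. This is a sketch that assumes the file was just generated by containerd config default (containerd 1.7.x), where the defaults are pause:3.8 and SystemdCgroup = false; the function name patch_containerd_config is hypothetical.

```shell
#!/bin/sh
# Apply the two config.toml edits from this section with sed instead of nano.
patch_containerd_config() {
  config="${1:-/etc/containerd/config.toml}"
  sed -i \
    -e 's#registry.k8s.io/pause:3.8#registry.k8s.io/pause:3.9#' \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    "$config"
  # Print the resulting lines (with line numbers) for a quick visual check
  grep -nE 'sandbox_image|SystemdCgroup' "$config"
}
```

If containerd is already running when you change the file, restart it with systemctl restart containerd so the new settings are loaded.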

2.3 Start containerd and Enable It at Boot

Enable containerd at boot and start it now:

systemctl enable --now containerd

Verify the version:

containerd --version

3. Deploying the K8S Cluster

3.1 Preparing the apt Repository for the K8S Cluster Packages

This guide uses the Kubernetes community package repository.

3.1.1 Update the apt package index and install the packages needed to use the Kubernetes apt repository:

sudo apt-get update

# apt-transport-https may be a dummy package; if so, you can skip installing it

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

3.1.2 Download the public signing key for the Kubernetes package repositories

The same signing key is used for all repositories, so you can disregard the version in the URL:

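# If the /etc/apt/keyrings directory does not exist, create it before running curl.
# It exists by default on Debian 12 and Ubuntu 22.04; older releases need this command:

# sudo mkdir -p -m 755 /etc/apt/keyrings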

# Kubernetes community repository

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.31/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

3.1.3 Add the Kubernetes apt repository

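Note that this repository only contains packages for Kubernetes 1.31. For other Kubernetes minor versions, change the minor version in the URL to the one you need (and make sure the installation documentation you are reading matches the Kubernetes version you plan to install).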

This command overwrites any existing configuration in /etc/apt/sources.list.d/kubernetes.list.

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

3.1.4 Update the apt package index

sudo apt-get update

3.2 Installing the K8S Cluster Packages and Configuring kubelet

Install these packages on all nodes.

3.2.1 Install the K8S cluster packages

Check the installed and candidate versions:

apt-cache policy kubeadm
kubeadm:
  Installed: (none)
  Candidate: 1.31.0-1.1
  Version table:
     1.31.0-1.1 500
        500 https://pkgs.k8s.io/core:/stable:/v1.31/deb Packages

Show the package versions and their dependencies:

apt-cache showpkg kubeadm

List all available versions:

apt-cache madison kubeadm

Install the default (latest) version:

sudo apt-get install -y kubelet kubeadm kubectl

Or install a specific version:

sudo apt-get install -y kubelet=1.31.0-1.1 kubeadm=1.31.0-1.1 kubectl=1.31.0-1.1

If the installation reports an error, check the package names and version strings in the command above.

Hold the packages at the installed version to prevent automatic upgrades later:

sudo apt-mark hold kubelet kubeadm kubectl

To allow upgrades again, release the hold:

sudo apt-mark unhold kubelet kubeadm kubectl

3.2.2 Configure kubelet

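To keep the cgroup driver used by kubelet consistent with the one used by the container runtime (systemd, set through SystemdCgroup = true in section 2.2), it is recommended to edit the following file: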

vim /etc/default/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

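Only enable kubelet at boot for now. Its configuration file has not been generated yet, so it starts automatically once the cluster is initialized: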

systemctl enable kubelet

3.3 Initializing the K8S Cluster

3.3.1 Check the version

kubeadm version

3.3.2 Generate the deployment configuration file

kubeadm config print init-defaults > kubeadm-config.yaml

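Edit the generated file, keeping the Kubernetes community image registry (imageRepository: registry.k8s.io). At minimum, set advertiseAddress to the master's IP address and name to the master's hostname, and add podSubnet under networking (it must match the Calico CIDR in section 4.2.2). The edited file: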

vim kubeadm-config.yaml

cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta4
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.15.101
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  imagePullSerial: true
  name: k8s-master01
  taints: null
timeouts:
  controlPlaneComponentHealthCheck: 4m0s
  discovery: 5m0s
  etcdAPICall: 2m0s
  kubeletHealthCheck: 4m0s
  kubernetesAPICall: 1m0s
  tlsBootstrap: 5m0s
  upgradeManifests: 5m0s
---
apiServer: {}
apiVersion: kubeadm.k8s.io/v1beta4
caCertificateValidityPeriod: 87600h0m0s
certificateValidityPeriod: 8760h0m0s
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
encryptionAlgorithm: RSA-2048
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.k8s.io
kind: ClusterConfiguration
kubernetesVersion: 1.31.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
proxy: {}
scheduler: {}
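Section 1.3.6 loads the IPVS modules, but kube-proxy runs in iptables mode on Linux unless told otherwise. Assuming IPVS is the intended proxy mode, one way to request it is to append a KubeProxyConfiguration document to kubeadm-config.yaml before running kubeadm init:

```shell
#!/bin/sh
# Append a KubeProxyConfiguration document so kube-proxy uses IPVS mode.
# Assumption: IPVS is the intended mode (ip_vs modules loaded in 1.3.6).
# The quoted 'EOF' prevents any shell expansion inside the YAML.
cat >> kubeadm-config.yaml << 'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
```

After the cluster is up, ipvsadm -Ln should list virtual servers for the Service IPs if IPVS mode is active.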

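3.3.3 List and pull the images

Pass the configuration file so that the image list matches its imageRepository and kubernetesVersion:

kubeadm config images list --config kubeadm-config.yaml

kubeadm config images pull --config kubeadm-config.yaml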

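3.3.4 Initialize the K8S cluster with the configuration file

The --v=9 flag enables the most verbose logging, which helps with troubleshooting; it can be omitted.

kubeadm init --config kubeadm-config.yaml --upload-certs --v=9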
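On success, kubeadm init prints the steps for giving kubectl access to the cluster: copy /etc/kubernetes/admin.conf to ~/.kube/config (as root, export KUBECONFIG=/etc/kubernetes/admin.conf also works). The sketch below wraps those steps in a function; the name setup_kubeconfig and the added chmod are illustrative choices, not part of the kubeadm output.

```shell
#!/bin/sh
# Copy the admin kubeconfig into the current user's ~/.kube/config,
# following the instructions printed by "kubeadm init".
setup_kubeconfig() {
  src="${1:-/etc/kubernetes/admin.conf}"
  dest_dir="${2:-$HOME/.kube}"
  mkdir -p "$dest_dir"
  cp "$src" "$dest_dir/config"
  chown "$(id -u):$(id -g)" "$dest_dir/config"
  # admin.conf carries cluster-admin credentials, so keep the copy private
  chmod 600 "$dest_dir/config"
}
```

Run setup_kubeconfig on k8s-master01 (with root privileges, since admin.conf is readable only by root), then check access with kubectl get nodes.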

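3.3.5 Join the worker nodes to the cluster

On each worker node, run the kubeadm join command printed at the end of the kubeadm init output (the token and hash below belong to this example cluster):

kubeadm join 192.168.15.101:6443 --token 0zw6m1.xhdu16hn1chkohb1 --discovery-token-ca-cert-hash sha256:fc70b4fc3ccf2e4de64afa2d70fd71cd7dcc1f7caf541022c002b531abb10f29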

4. Deploying the Calico Network Plugin

Calico documentation: https://docs.tigera.io/calico/latest/about/

4.1 Deploy tigera-operator

4.1.1 Deploy tigera-operator with the official command

root@k8s-master01:~# kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/tigera-operator.yaml

4.1.2 Check the namespaces

root@k8s-master01:~# kubectl get ns

NAME STATUS AGE

default Active 3d

kube-node-lease Active 3d

kube-public Active 3d

kube-system Active 3d

tigera-operator Active 22h # tigera-operator has been deployed

4.2 Deploy Calico

4.2.1 Download the corresponding YAML file

root@k8s-master01:~# wget https://raw.githubusercontent.com/projectcalico/calico/v3.29.2/manifests/custom-resources.yaml

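4.2.2 Change the cidr in the YAML file

The default cidr (192.168.0.0/16) overlaps the node network 192.168.15.0/24 used here. Change it to the podSubnet configured in kubeadm-config.yaml (10.244.0.0/16):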

# This section includes base Calico installation configuration.
# For more information, see: https://docs.tigera.io/ca…
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  # Configures Calico networking.
  calicoNetwork:
    ipPools:
    - name: default-ipv4-ippool
      blockSize: 26
      cidr: 10.244.0.0/16 # set this to the cluster's podSubnet
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()
---
# This section configures the Calico API server.
# For more information, see: https://docs.tigera.io/ca…
apiVersion: operator.tigera.io/v1
kind: APIServer
metadata:
  name: default
spec: {}
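The cidr change can also be scripted. This minimal sketch rewrites the value after every cidr: key in the downloaded file (the default file has a single IPv4 pool); the function name set_calico_cidr is hypothetical, and the value must equal podSubnet in kubeadm-config.yaml.

```shell
#!/bin/sh
# Replace the value of every "cidr:" key in a Calico custom-resources file,
# keeping the original indentation. "#" is the sed delimiter because the
# CIDR itself contains "/".
set_calico_cidr() {
  file="$1"
  cidr="$2"
  sed -i "s#^\([[:space:]]*cidr:\).*#\1 $cidr#" "$file"
}
```

Usage: set_calico_cidr custom-resources.yaml 10.244.0.0/16, then grep cidr custom-resources.yaml to confirm the change.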

4.2.3 Deploy Calico with the modified YAML file

root@k8s-master01:~# kubectl create -f custom-resources.yaml

4.2.4 Check the namespaces

root@k8s-master01:~# kubectl get ns

NAME STATUS AGE

calico-apiserver Active 22h

calico-system Active 22h

default Active 3d

kube-node-lease Active 3d

kube-public Active 3d

kube-system Active 3d

tigera-operator Active 22h

4.2.5 Check that the related pods are running

root@k8s-master01:~# watch kubectl get pods -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-58b4967c4d-6v82h 1/1 Running 0 22h

calico-node-7sg2x 1/1 Running 0 22h

calico-node-g5mhm 1/1 Running 0 22h

calico-node-p7m2x 1/1 Running 0 22h

calico-typha-7d49d8f8c8-5n5mj 1/1 Running 0 22h

calico-typha-7d49d8f8c8-r8q5t 1/1 Running 0 22h

csi-node-driver-77zfk 2/2 Running 0 22h

csi-node-driver-qlswk 2/2 Running 0 22h

csi-node-driver-wldxj 2/2 Running 0 22h

4.2.6 Check the cluster node status

root@k8s-master01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane 3d v1.31.0

k8s-worker01 Ready <none> 3d v1.31.0

k8s-worker02 Ready <none> 3d v1.31.0

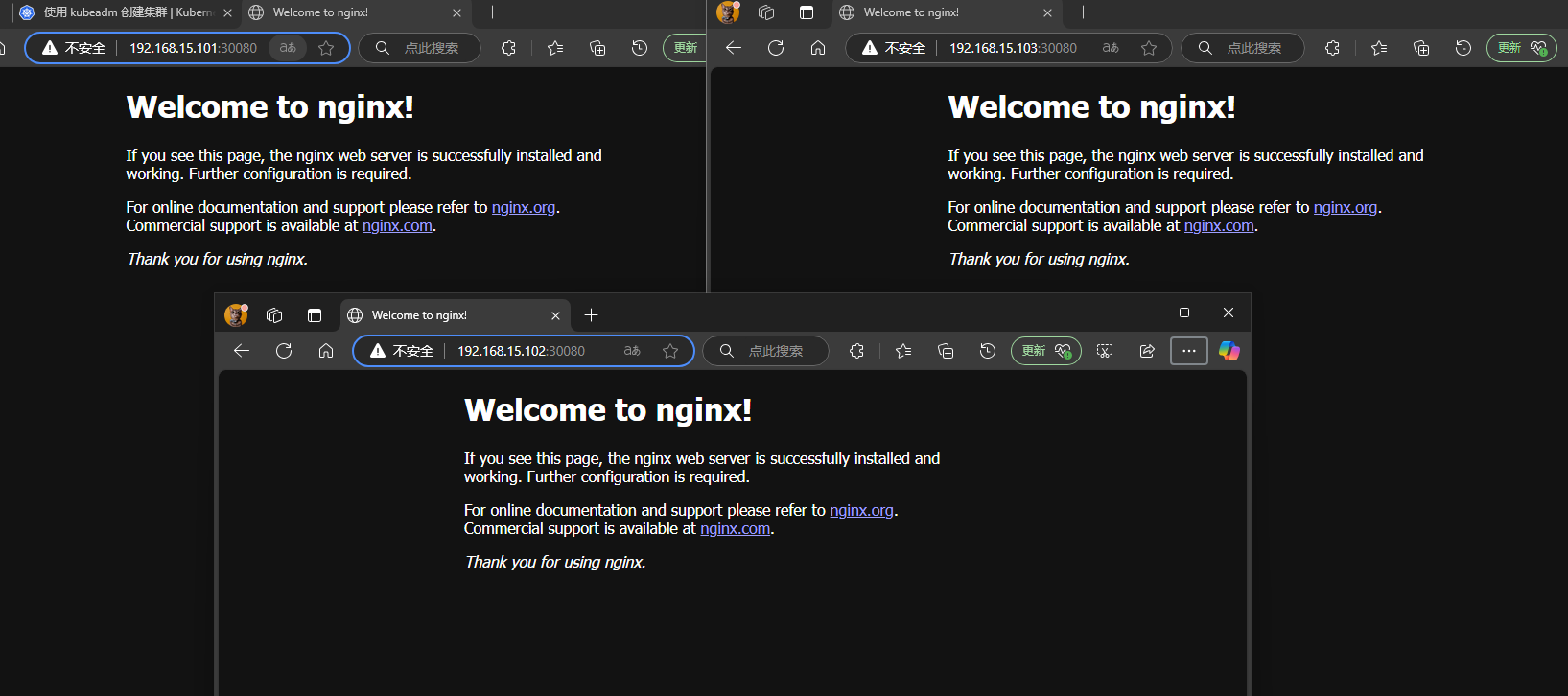

5. Deploying an Nginx Application to Verify the K8S Cluster

5.1 Deploy Nginx

5.1.1 Create the YAML file

root@k8s-master01:~# nano nginx.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginxweb
spec:
  selector:
    matchLabels:
      app: nginxweb1
  replicas: 2
  template:
    metadata:
      labels:
        app: nginxweb1
    spec:
      containers:
      - name: nginxwebc
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginxweb-service
spec:
  externalTrafficPolicy: Cluster
  selector:
    app: nginxweb1
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30080
  type: NodePort

5.1.2 Deploy Nginx with the YAML file

root@k8s-master01:~# kubectl apply -f nginx.yaml


deployment.apps/nginxweb created

service/nginxweb-service created

5.1.3 Check the Deployment

root@k8s-master01:~# kubectl get deployment


NAME READY UP-TO-DATE AVAILABLE AGE

nginxweb 2/2 2 2 32s

5.1.4 Check the pods

root@k8s-master01:~# kubectl get pods


NAME READY STATUS RESTARTS AGE

nginxweb-b4ccbf5dc-bvd25 1/1 Running 0 34s

nginxweb-b4ccbf5dc-wt74z 1/1 Running 0 34s

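5.1.5 Access from inside the cluster

From any cluster node, request the Service's ClusterIP (10.99.64.212 in this example; look up yours with kubectl get svc nginxweb-service):

curl http://10.99.64.212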


<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

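5.1.6 Access from outside the cluster

The Service is of type NodePort with nodePort 30080, so it is reachable on port 30080 of any node, for example http://192.168.15.101:30080.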
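To check every node at once from a machine outside the cluster, the sketch below requests the NodePort on each given address and prints only the HTTP status code. Port 30080 comes from nginx.yaml and the addresses from section 1.3.2; the function name probe_nodeport is hypothetical.

```shell
#!/bin/sh
# Request http://IP:PORT/ on each given node and print the HTTP status code.
# -s hides progress output, -o /dev/null discards the page body, and
# -w '%{http_code}' prints the status code (000 if no connection was made).
probe_nodeport() {
  port="$1"; shift
  for ip in "$@"; do
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$ip:$port/")
    echo "$ip:$port -> HTTP $code"
  done
}
```

Usage: probe_nodeport 30080 192.168.15.101 192.168.15.102 192.168.15.103. Because externalTrafficPolicy is Cluster, every node should answer with 200, including nodes that are not running an nginx pod.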